Introducing RedGraph: The World’s First Attack Surface Mapping & Continuous Testing for AI Agents

Introducing RedGraph: Attack Surface Mapping & Testing for AI Agents

Read

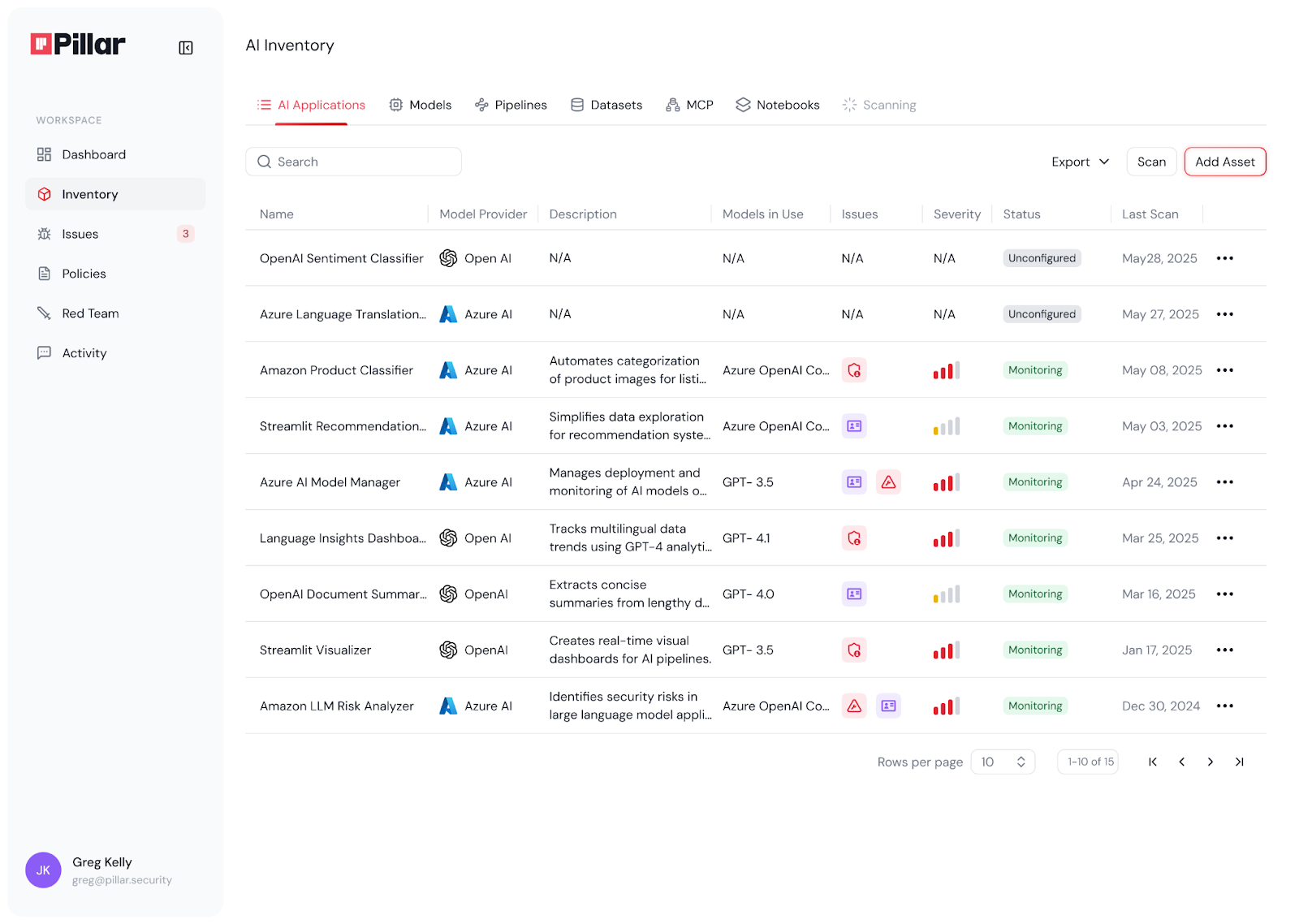

Your organization is building on top of AI faster than you think. A data science team spins up a sentiment analysis model in a Jupyter notebook. Marketing deploys a ChatGPT-powered chatbot through a third-party tool. Product builds a homegrown agent that combines an LLM with your internal APIs to automate customer support workflows.Engineering integrates Claude into the CI/CD pipeline. Finance experiments with a custom forecasting model in Python.

Each of these represents an AI asset. And like most enterprises going through rapid AI adoption, there's often limited visibility into the full scope of AI deployments across different teams.

As AI assets sprawl across organizations, the question isn't whether you have Shadow AI - it's how much Shadow AI you have. And the first step to managing it is knowing it exists.

This is where AI Asset Inventory comes in.

AI Asset Inventory is a comprehensive catalog of all AI-related assets in your organization. Think of it as your AI Bill of Materials (AI-BOM) - a living registry that answers critical questions:

Without this visibility, you're flying blind. You can't secure what you don't know exists. You can't govern what you haven't cataloged. You can't manage risk in assets that aren't tracked.

Unlike traditional software, AI assets are uniquely difficult to track:

Diverse Asset Types: AI isn't just models. It's training datasets, inference endpoints, system prompts, vector databases, fine-tuning pipelines, ML frameworks, coding agents, MCP servers and more. Each requires different discovery approaches.

Decentralized Development: AI development happens across multiple teams, tools, and environments. A single project might span Jupyter notebooks in development, models in cloud ML platforms, APIs in production, and agents in SaaS tools.

Rapid Experimentation: Data scientists create and abandon dozens of experimental models. Many never make it to production, but they may still process sensitive data or contain vulnerabilities.

Shadow AI: Business units increasingly deploy AI solutions without going through IT or security review - from ChatGPT plugins to no-code AI platforms to embedded AI in SaaS applications.

Different AI sources carry different risks. A third-party API, an open-source model, and your internal training pipeline each present unique security challenges. Understanding these source-specific risks is critical for prioritizing your governance efforts. Let's examine some of them:

Supply Chain Risks: Development teams import pre-trained models and libraries from public repositories like Hugging Face and PyPI. These dependencies may contain backdoors, malicious code, or vulnerable components that affect every model using them.

Data Poisoning Risks: Training notebooks often pull datasets from public sources without validation. Attackers can inject poisoned samples into public datasets or compromise internal data pipelines, causing models to learn incorrect patterns or embed hidden backdoors.

Security Misconfigurations: Jupyter notebooks containing sensitive credentials exposed to the internet. Development environments with overly permissive access controls. API keys hardcoded in training scripts. Model endpoints deployed without authentication. Each represents a potential entry point that traditional security tools may miss because they're focused on production infrastructure, not experimental AI environments.

Model Theft & Exfiltration: Proprietary models stored in cloud platforms become targets for theft. Misconfigured storage buckets or overly permissive IAM roles can expose valuable IP, while attackers can extract models through repeated queries to exposed endpoints.

Supply Chain Risks: Cloud marketplaces provide pre-built models and containers from third-party vendors that may contain outdated dependencies, licensing violations, or malicious modifications—often deployed without security review.

Data Leakage Risks: Sending sensitive data to external APIs like OpenAI or Anthropic means losing control over that data. Without proper agreements, proprietary information may be used to train external models or exposed through provider breaches.

Prompt Injection Risks: Applications using LLM APIs are vulnerable to prompt injection attacks where malicious users manipulate prompts to extract sensitive information, bypass controls, or cause unintended behaviors.

Shadow AI Proliferation: Business units enable AI features in CRM tools and marketing platforms without security review. These AI capabilities may process sensitive customer data, financial information, or trade secrets outside IT visibility.

Data Residency & Compliance Risks: Embedded AI features may send data to different geographic regions or subprocessors, creating compliance issues for organizations subject to GDPR, HIPAA, or data localization requirements.

Creating an effective AI Asset Inventory requires systematic discovery across your entire technology landscape. Here's how to approach it:

Connect with your code repositories to identify AI/ML activity:

Integrate with platforms where AI workloads run:

Monitor actual AI usage in production:

Your AI Asset Inventory should be more than a simple spreadsheet. It needs to be a living system that tracks:

.png)

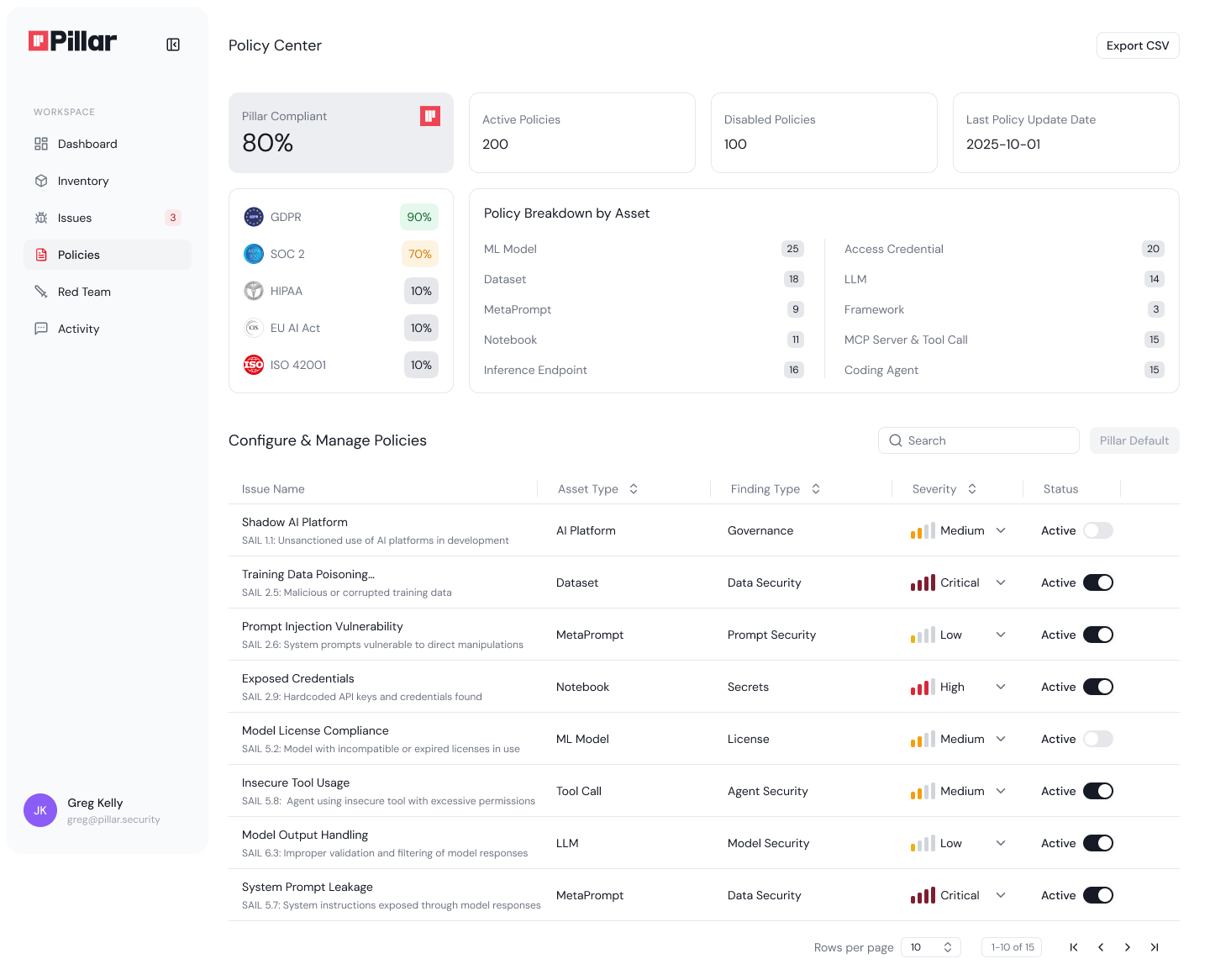

AI Asset Inventory is the foundation, but it's not the end goal. Once you have visibility, you can:

Pillar Security provides comprehensive AI discovery and security posture management capabilities that address AI sprawl at its core.

Pillar's AI Discovery automatically identifies and catalogs all AI assets across your organization—from models and training pipelines to libraries, meta-prompts, MCP servers, agentic frameworks and datasets. By integrating with code repositories, data platforms, and AI/ML infrastructure, Pillar continuously scans and maintains a real-time inventory of your entire AI footprint, capturing the AI-specific components that traditional code scanners and DevSecOps tools miss.

Beyond discovery, Pillar's AI Security Posture Management (AI-SPM) conducts deep risk analysis of identified assets and maps the complex interconnections between AI components. Our analysis engines examine both code and runtime behavior to visualize your AI ecosystem, including emerging agentic systems and their attack surfaces. This helps teams identify critical risks like model poisoning, data contamination, and supply chain vulnerabilities that are unique to AI pipelines.

Discovering and cataloging AI assets is just the beginning. Pillar Security transforms that visibility into active protection through continuous testing and runtime enforcement.

Pillar runs simulated attacks tailored to your AI system's use case—from prompt injections and jailbreaking to sophisticated business logic exploits. Red teaming evaluates whether AI agents can be manipulated into unauthorized refunds, data leaks, or unintended tool actions, testing not just the model but the entire agentic application and its integrations.

For tool-enabled agents, Pillar integrates threat modeling with dynamic tool activation, testing how chained API calls might be weaponized in realistic attack scenarios. For third-party AI apps like copilots or embedded chatbots, Pillar offers black-box red teaming—requiring only a URL and credentials to stress-test any accessible AI application and uncover exposure risks.

Red teaming reveals vulnerabilities, but runtime guardrails prevent them from being exploited in production. Pillar's runtime controls monitor inputs and outputs to enforce security policies without interrupting performance. Unlike static rules, these model-agnostic, application-centric guardrails continuously evolve using telemetry data, red teaming insights, and threat intelligence.

Guardrails adapt to each application's business logic, preventing misuse like data exfiltration or unintended actions while preserving intended AI behavior. Organizations can enforce custom policies and conduct rules across all AI applications with precision—closing the loop from discovery to assessment to active protection.

Ready to gain control of your AI Asset Inventory? Learn how Pillar Security can help you discover, assess, and secure your AI assets at scale.

Subscribe and get the latest security updates

Back to blog