Pillar has assembled the world's brightest minds from military intelligence and enterprise security to dismantle emerging threats in the new AI landscape. Our team’s expertise fuses deep offensive roots in traditional security (AppSec, Cloud, OS, Malware) with Frontier AI disciplines (Data Science, AI, Machine Learning). This hybrid DNA allows us to deconstruct complex attacks that cross the boundary between code, infrastructure, and autonomous systems.

Adversarial AI Research

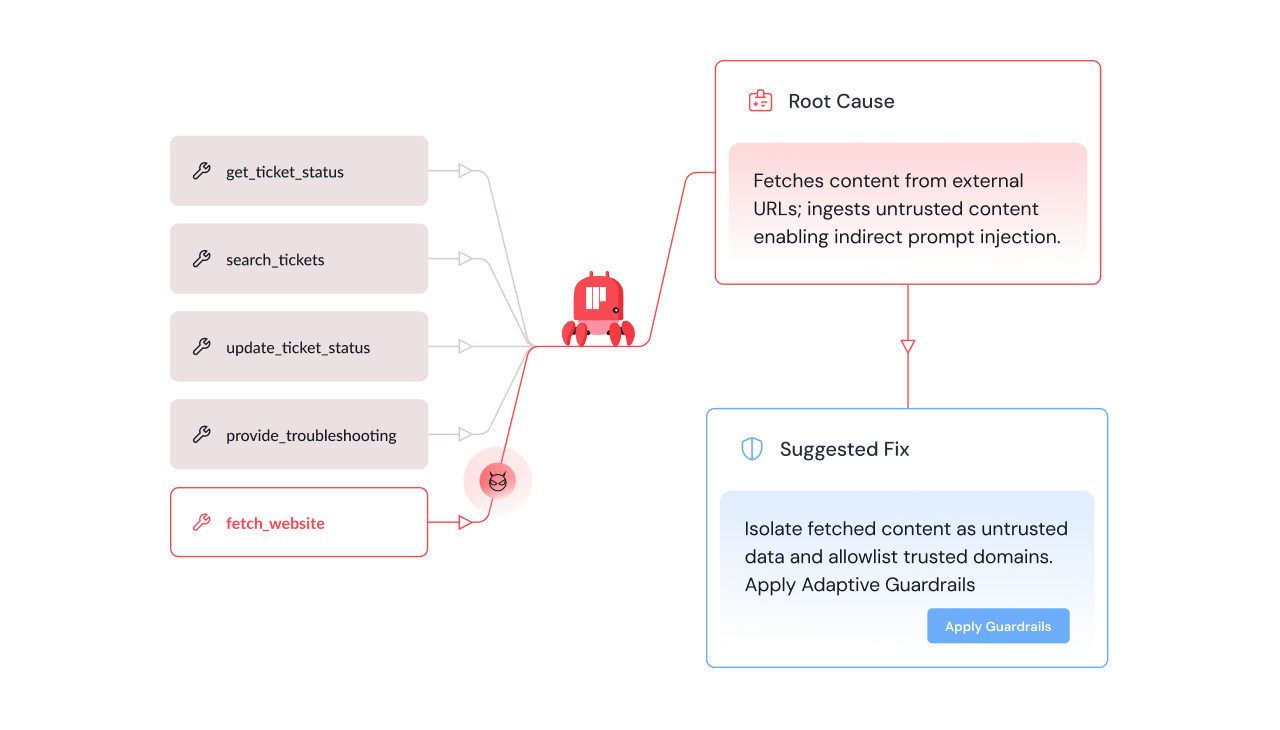

Dissects Foundation Models and agents to identify zero-day vulnerabilities, novel jailbreaks, and prompt injection techniques.

Threat Intelligence

Monitors the wild for emerging trends in how attackers are weaponizing AI, delivering proactive insights rather than reactive alerts.

Red Teaming Operations

Simulates sophisticated attacks on AI pipelines to validate defenses and expose logic gaps that standard tools miss.

Architecting Enterprise Defense

Translates research into product capabilities, building next-gen features like the Safe MCP Registry and integrated Threat Intel feeds to secure the future of AI work.

Open Source & Supply Chain Security

Actively hunts for vulnerabilities in the open-source AI ecosystem—from coding agents to model hubs—to harden the community tools that enterprises rely on.

Our research team has identified and reported security vulnerabilities in the most popular coding agents, IDEs, model hubs, and agentic workflow platforms.

What we discovered

Our threat intelligence team discovered a complete LLMjacking supply chain. Attackers scan for exposed AI endpoints, validate access through systematic API testing, then resell discounted access to compromised infrastructure through commercial marketplaces operating on bulletproof hosting.

Between December 2025 and January 2026, a Pillar Security Research honeypot mimicking exposed AI infrastructure observed real-world attack patterns. Over 40 days, we identified 35,000 attack sessions from three coordinated threat actors. This investigation reveals how cybercriminals discover, validate, and monetize unauthorized access to AI infrastructure through a coordinated supply chain spanning reconnaissance, validation, and commercial resale.

Meet the Pillar Research Team

Schedule a deep dive with the Pillar research team to learn about our latest research and findings. Get direct access to our experts and hear about the most novel attacks we are seeing in the wild.
