Introducing RedGraph: The World’s First Attack Surface Mapping & Continuous Testing for AI Agents

Artificial Intelligence is fundamentally reshaping the technological landscape, introducing unprecedented opportunities alongside complex security challenges.

This blog highlights the key AI security trends that will define 2025.

The AI landscape of 2025 stands at a critical inflection point. According to IEEE's latest global technology survey, AI ranks as the most important technology: 58% of technology leaders predict it will be the most significant area of tech in the year ahead. This consensus reflects AI's impact across industries, reinforced by projections that global AI spending will reach $200 billion by 2025.

However, this rapid advancement brings complex challenges. Organizations face a dual imperative: leveraging AI's transformative potential while ensuring robust security measures against evolving threats. The survey reveals that cybersecurity is top-of-mind for technology leaders, with 48% identifying real-time vulnerability detection and attack prevention as the primary use case for AI in 2025.

This blog explores six key security trends that will shape the AI landscape in 2025. Understanding these trends is crucial for organizations and individuals alike, as they will fundamentally impact how we approach AI security, compliance, and risk management in our increasingly AI-driven world.

AI tools are evolving fast. What started as simple chatbots with basic capabilities has now advanced to “copilots” that assist with more complex tasks, such as understanding and summarizing multiple documents, and ultimately to fully autonomous “AI agents.” These new AI agents, sometimes called “agentic AI,” are built to act independently to complete tasks and make decisions without constant human input.

Agentic AI combines multiple AI systems, allowing agents to plan, learn, sense their environment, and use tools to carry out tasks autonomously in pursuit of specific goals, which improves productivity. Gartner predicts that by 2028, at least 15% of day-to-day work decisions will be made autonomously by agentic AI, up from 0% in 2024.

However, agentic AI brings with it new security challenges:

With these new challenges, we may also see security frameworks designed specifically for agents. Such frameworks would address the challenges above, for example by standardizing security controls across every module and tool an agent can access.
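To make the idea concrete, here is a minimal Python sketch of one control such a framework might include: a deny-by-default policy that lists which tools each agent may call and which of those calls need human approval. The `ToolPolicy` class, the `authorize` function, and the tool names are hypothetical and not taken from any specific framework.

```python
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Hypothetical per-agent policy: which tools (modules) an agent may call."""
    agent_id: str
    allowed_tools: set[str] = field(default_factory=set)
    # Tools that additionally need explicit human approval before each call.
    approval_required: set[str] = field(default_factory=set)


def authorize(policy: ToolPolicy, tool: str, approved: bool = False) -> bool:
    """Return True only if the agent may invoke `tool` right now."""
    if tool not in policy.allowed_tools:
        return False  # deny by default: unlisted tools are never callable
    if tool in policy.approval_required and not approved:
        return False  # sensitive tool, but no human sign-off yet
    return True


policy = ToolPolicy(
    agent_id="support-agent",
    allowed_tools={"search_kb", "read_ticket", "send_email"},
    approval_required={"send_email"},
)

print(authorize(policy, "search_kb"))                  # True
print(authorize(policy, "send_email"))                 # False
print(authorize(policy, "send_email", approved=True))  # True
print(authorize(policy, "delete_records"))             # False
```

Denying unlisted tools by default means a newly connected module stays unreachable until someone grants the agent access explicitly.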

As AI continues to evolve, the idea of a single AI agent is expanding into something larger and collaborative: multi-agent systems (MAS). This trend is gaining attention because of its potential to handle complex tasks that require both autonomy and teamwork across agents.

Unlike individual AI agents that operate independently, multi-agent systems (MAS) consist of multiple AI agents working together, each with specific roles and capabilities, to achieve a shared goal. These systems are valuable when tasks are too complex or costly for a single AI agent to handle.
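As an illustration of agents with "specific roles and capabilities" working toward a shared goal, the Python sketch below assigns each subtask to the first agent whose declared capabilities cover it. The `Agent` type, the `assign` function, and the agent names and capability labels are all hypothetical; real MAS frameworks use their own orchestration logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    """A hypothetical agent with a role and the capabilities it offers."""
    name: str
    role: str
    capabilities: frozenset[str]


def assign(subtasks: list[tuple[str, str]], agents: list[Agent]) -> dict[str, str]:
    """Map each subtask to the first agent whose capabilities cover it.

    `subtasks` is a list of (subtask_id, required_capability) pairs.
    Raises ValueError if no agent can handle a subtask.
    """
    plan: dict[str, str] = {}
    for task_id, needed in subtasks:
        match = next((a for a in agents if needed in a.capabilities), None)
        if match is None:
            raise ValueError(f"no agent can handle {task_id!r} ({needed})")
        plan[task_id] = match.name
    return plan


agents = [
    Agent("researcher", "gathers information", frozenset({"web_search", "summarize"})),
    Agent("analyst", "evaluates data", frozenset({"run_sql", "chart"})),
    Agent("writer", "drafts output", frozenset({"draft_report"})),
]

# Shared goal (illustrative): produce a market report, split into subtasks.
subtasks = [
    ("collect", "web_search"),
    ("analyze", "run_sql"),
    ("report", "draft_report"),
]
print(assign(subtasks, agents))
# {'collect': 'researcher', 'analyze': 'analyst', 'report': 'writer'}
```

Declaring capabilities up front also gives a natural place to scope each agent's access, since an agent is only ever handed subtasks that match what it declared.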

Some frameworks for multi-agent systems include the following:

Security risks associated with MAS include the following:

The rise of small, efficient models is making advanced AI more accessible. Compact models bring powerful capabilities to a broader audience. Unlike large models that require extensive computational resources, these smaller models can run on modest hardware, such as a single GPU. This lets developers, startups, academics, and small businesses use AI without high costs.
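A rough calculation shows why smaller models fit on a single GPU: weight memory is approximately the parameter count times the bytes stored per parameter. The sketch below uses illustrative 7B and 70B parameter counts (not figures from this post) and counts weights only, ignoring activations, the KV cache, and runtime overhead, which all add to the real total.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Illustrative model sizes at 16-bit and 4-bit (quantized) precision.
for params, label in [(7e9, "7B"), (70e9, "70B")]:
    for bits in (16, 4):
        print(f"{label} @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, a 7B model quantized to 4 bits needs about 3.5 GB for its weights, within reach of a single consumer GPU, while a 70B model at 16-bit needs about 140 GB.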

This shift to small models and local deployment democratizes AI by lowering the resources needed for training and operation. With reduced costs and simpler requirements, a broad range of users now have access to powerful AI tools.

However, this trend brings specific security concerns:

A major step forward in AI capabilities is the recent beta release of Claude 3.5 Sonnet’s “computer use” feature, designed to operate a computer much like a human would. Through an API, developers can now instruct Claude to perform computer tasks such as moving a cursor, clicking buttons, typing text, and analyzing screenshots. Given a task, Claude works through it step by step, requesting each action in turn while the developer's application carries it out and returns the result.
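Because the computer-use tool returns action requests that the developer's own code carries out, the application is a natural place to enforce limits. The Python sketch below checks each requested action against an allowlist and the screen bounds before anything is executed. The request shape loosely mirrors the tool's `action`/`coordinate`/`text` inputs, but the field names, the allowlist, the blocked key, and the 1024x768 display size are assumptions for illustration; Anthropic's documentation defines the actual schema.

```python
from typing import Any

# Hypothetical policy: the only actions this app will carry out.
ALLOWED_ACTIONS = {"screenshot", "cursor_position", "mouse_move", "left_click", "type", "key"}
# Illustrative key combinations the app refuses to send.
BLOCKED_KEYS = {"ctrl+alt+delete"}
# Assumed size of the display the model is controlling.
SCREEN_W, SCREEN_H = 1024, 768


def validate_action(request: dict[str, Any]) -> tuple[bool, str]:
    """Check one model-requested action before the app executes it.

    Returns (allowed, reason). Field names ("action", "coordinate",
    "text") are assumptions modeled loosely on the computer-use tool.
    """
    action = request.get("action")
    if action not in ALLOWED_ACTIONS:
        return False, f"action {action!r} not allowed"
    if "coordinate" in request:
        x, y = request["coordinate"]
        if not (0 <= x < SCREEN_W and 0 <= y < SCREEN_H):
            return False, "coordinate outside the display"
    if action == "key" and str(request.get("text", "")).lower() in BLOCKED_KEYS:
        return False, "blocked key combination"
    return True, "ok"


# Example requests, as the app might receive them from the model.
sample_requests = [
    {"action": "screenshot"},
    {"action": "mouse_move", "coordinate": [500, 300]},
    {"action": "mouse_move", "coordinate": [5000, 300]},
    {"action": "key", "text": "ctrl+alt+delete"},
    {"action": "left_click_drag", "coordinate": [10, 10]},
]
for r in sample_requests:
    print(r["action"], validate_action(r))
```

In a real agent loop, a rejected action would be reported back to the model as an error instead of being executed.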

This advancement is drawing attention across the tech world as it could redefine the scope of AI automation, allowing average users to offload even more digital tasks to AI systems. Claude’s computer-use feature is currently experimental and expected to evolve quickly as developers test and refine it.

Given this feature’s initial success, other companies are likely to release similar capabilities soon.

However, a few security concerns also arise with this feature:

With AI being used in highly regulated sectors like healthcare and finance, the need for tools to ensure responsible and ethical AI use is growing. AI governance platforms provide essential safeguards to help organizations maintain transparency, fairness, and accountability in AI operations.

By 2028, organizations implementing AI governance solutions are expected to achieve 30% higher customer trust and 25% better regulatory compliance than their peers.

Several standards have been developed to guide organizations in the ethical and safe use of AI, such as:

AI governance solutions can benefit organizations in the following ways:

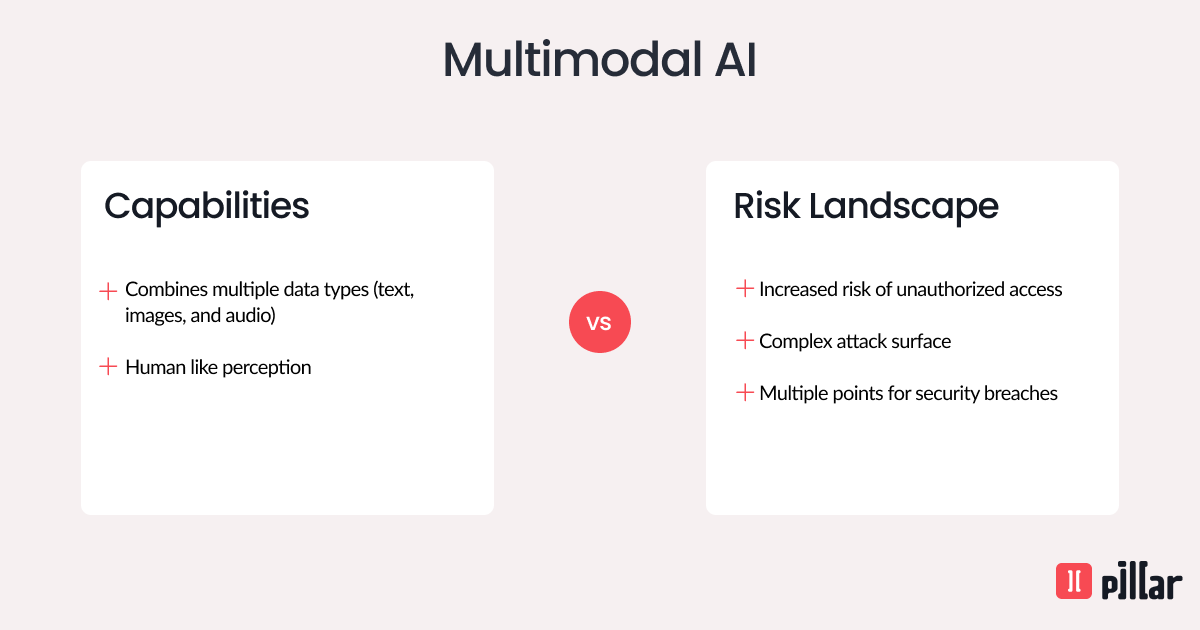

Multimodal AI is transforming how AI systems process and respond to the world. Unlike traditional AI systems that handle a single data type, multimodal AI can combine text, image, and audio inputs, letting a system interpret different kinds of information together. This brings AI closer to human-like perception and enhances tools such as virtual assistants and content-creation apps.

Some leading frameworks in multimodal AI include:

Multimodal frameworks drive new capabilities across industries. In healthcare, for example, multimodal AI can analyze medical images alongside patient histories to improve diagnostic accuracy. In the workplace, it lets non-specialists take on design or coding tasks without specialized training.

However, multimodal AI introduces specific security challenges:

These AI trends highlight both the exciting potential and the risks of new AI developments. For companies, success in 2025 will depend on their ability to implement comprehensive security strategies that align with these evolving technologies. Organizations must act now to build security frameworks that can adapt to the rapidly changing AI landscape while maintaining operational efficiency.

By partnering with Pillar Security, organizations can ensure their AI implementations are protected by practical, enforceable security measures that go beyond theoretical frameworks. Our comprehensive approach to testing and validation enables leading companies to deploy AI solutions that remain secure and trustworthy in real-world applications, aligning with the evolving security demands of 2025 and beyond.
