Introducing RedGraph: The World’s First Attack Surface Mapping & Continuous Testing for AI Agents

Specialized AI agents are increasingly being deployed to automate complex, industry-specific workflows. Equipped with multiple tools and platform integrations, these agents can operate across different systems with minimal human oversight.

But here’s the catch: even when individual tools are secure in isolation, combining them can create new, unexpected vulnerabilities, a phenomenon we call vertical agentic risk. This “toxic combination” effect can dramatically amplify the blast radius of a breach.

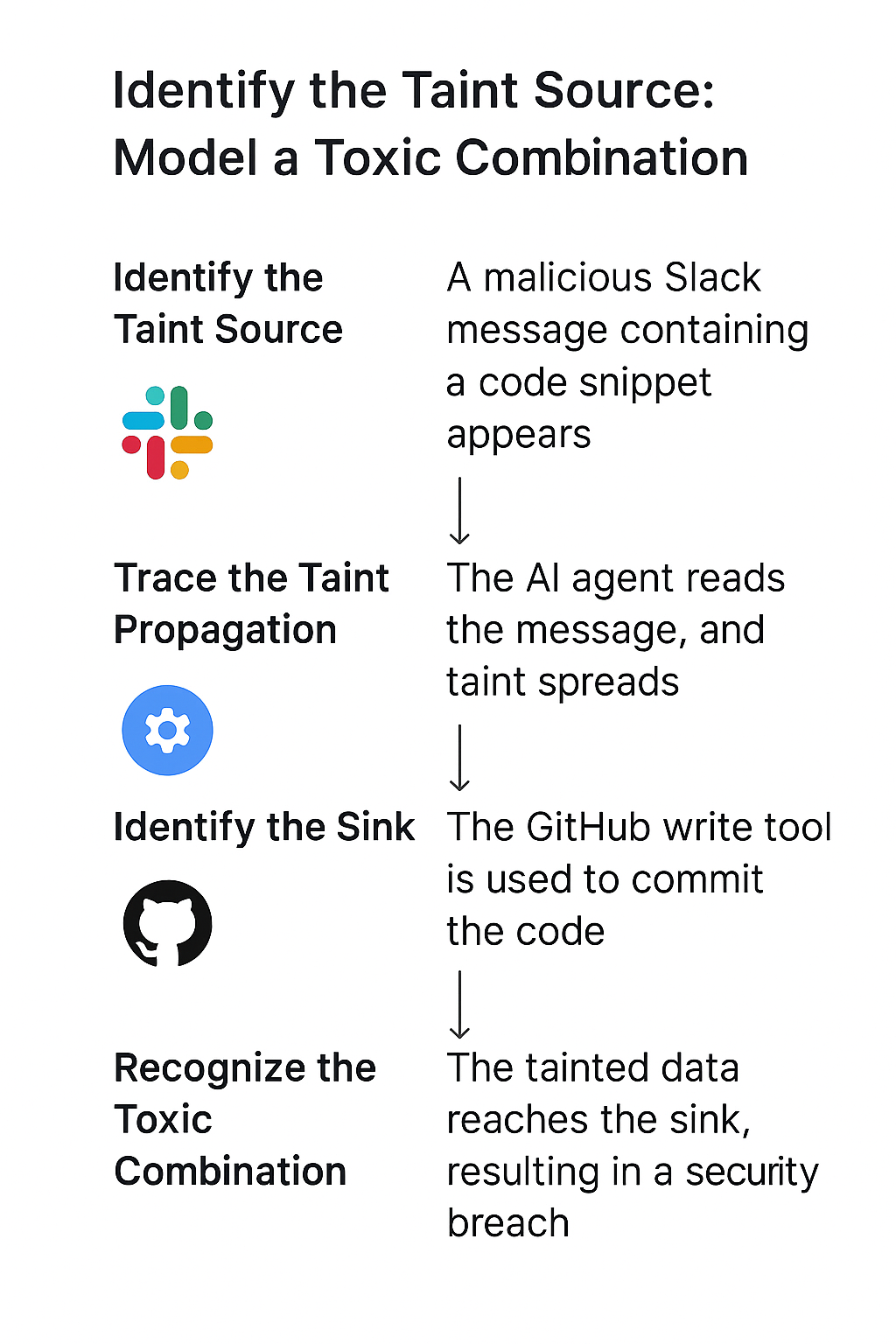

In this blog, we’ll explore how to adapt a proven cybersecurity technique—taint analysis—to model and mitigate these risks before they lead to costly incidents.

Taint analysis is a technique for tracking untrusted data as it flows through a system. It starts by marking (“tainting”) any data from untrusted sources—think user input, third-party APIs, or external messages. As the data moves through the system, the taint follows it.

The goal? To see whether tainted data ever reaches a sink: a sensitive operation, such as executing a system command, writing to a database, or committing code, where it could cause real harm.
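To make the source, propagation, and sink ideas concrete, here is a minimal sketch in Python. It is an illustration, not a production taint tracker: the names `Value`, `from_untrusted`, `concat`, `run_command`, and `TaintError` are hypothetical and exist only for this example, and the sink deliberately executes nothing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Value:
    """A piece of data plus a flag recording whether it is tainted."""
    data: str
    tainted: bool = False


class TaintError(Exception):
    """Raised when tainted data reaches a sink."""


def from_untrusted(data: str) -> Value:
    """Source: anything arriving from outside is marked as tainted."""
    return Value(data, tainted=True)


def concat(*parts: Value) -> Value:
    """Propagation: the result is tainted if any input was tainted."""
    return Value(
        "".join(p.data for p in parts),
        tainted=any(p.tainted for p in parts),
    )


def run_command(cmd: Value) -> str:
    """Sink: refuse tainted input (hypothetical stand-in, runs nothing)."""
    if cmd.tainted:
        raise TaintError(f"tainted data reached sink: {cmd.data!r}")
    return f"would run: {cmd.data}"


# Trusted pieces combine into a trusted value and pass the sink check.
print(run_command(concat(Value("ls "), Value("/tmp"))))

# A single untrusted piece taints the whole value, so the sink rejects it.
try:
    run_command(concat(Value("ls "), from_untrusted("/tmp; echo pwned")))
except TaintError as err:
    print(err)
```

The key design choice is that taint is sticky: once any input to an operation is tainted, the output is too, so the check at the sink does not need to know where the data originally came from.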

Vertical AI agents are tailored for specific industries, using specialized tools to execute highly autonomous workflows. This autonomy is a double-edged sword: while it boosts efficiency, it also increases the potential for unintended and dangerous interactions between capabilities.

Vertical agentic risk emerges when individually safe tools become risky in sequence—forming a toxic combination that attackers can exploit.

Consider an AI agent designed for a software development company with two primary tools, one for Slack and one for GitHub.

On their own, these tools seem to pose a limited threat. The Slack tool can only observe, and the GitHub tool is meant for development tasks. The vertical agentic risk, however, lies in the potential for these tools to be chained together in a malicious sequence.

Here is how taint analysis can be used to model and understand this threat:
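One way to sketch this model in code is as a reachability check over a small data-flow graph. Everything in the example below is an assumption made for illustration: the node names (`slack_message`, `agent_context`, `github_commit`), the edges between them, and the choice to treat Slack message content as the untrusted source and a GitHub commit as the sink.

```python
from collections import deque

# Hypothetical data-flow graph: an edge A -> B means data can flow from A to B.
FLOWS = {
    "slack_message": ["agent_context"],   # the agent reads the message
    "agent_context": ["github_commit"],   # the agent acts on what it read
    "github_commit": [],
}
SOURCES = {"slack_message"}  # assumed untrusted: content written by other people
SINKS = {"github_commit"}    # assumed sensitive: changes the codebase


def tainted_paths(flows, sources, sinks):
    """Breadth-first search from each source; return every path ending at a sink."""
    found = []
    for src in sorted(sources):
        queue = deque([[src]])
        while queue:
            path = queue.popleft()
            node = path[-1]
            if node in sinks:
                found.append(path)
                continue
            for nxt in flows.get(node, []):
                if nxt not in path:  # skip nodes already on this path (cycles)
                    queue.append(path + [nxt])
    return found


for path in tainted_paths(FLOWS, SOURCES, SINKS):
    print(" -> ".join(path))
# prints "slack_message -> agent_context -> github_commit"
```

Each path the search returns is a candidate toxic combination: a route by which untrusted input could influence a sensitive action. Removing or guarding any edge on the path (for example, the flow from `agent_context` to `github_commit`) makes the sink unreachable in this model.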

Threat modeling for AI systems is an evolving discipline, with frameworks like STRIDE and those from MITRE providing a foundation. However, the dynamic and autonomous nature of agentic AI requires a continuous and adaptive approach to security. Once a potential toxic combination is identified through taint analysis, several mitigation strategies can be employed:

In conclusion, as vertical AI agents become more integrated into business-critical workflows, understanding and mitigating the associated risks is paramount. Taint analysis offers a powerful and intuitive framework for modeling how seemingly benign tools can be combined to create significant security threats. By systematically tracing the flow of data and identifying these toxic combinations, organizations can implement targeted controls to secure their agentic AI systems and safely harness their transformative potential.

Want to see a live demo on how we identify and mitigate vertical agentic risks? Talk to us.
